For technical leadership, the challenge is no longer just designing a functional printed circuit board (PCB). The real challenge is delivering a predictable, scalable product that maintains profitability over a five-year lifecycle.

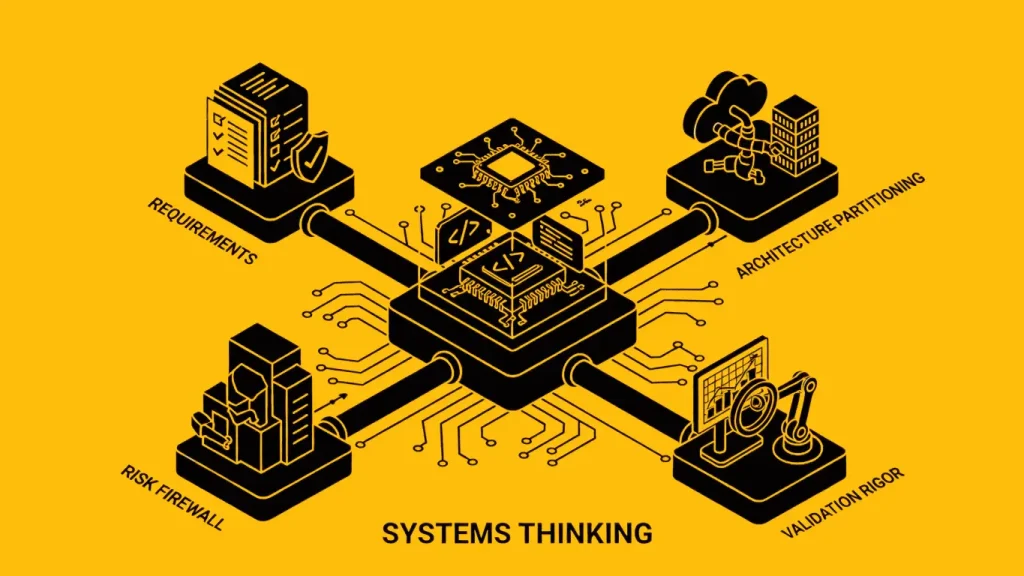

Systems Thinking is the methodology that bridges the gap between component-level design (the chip) and ecosystem-level assurance (the field).

It mandates that every engineering decision—from silicon choice to cloud telemetry—be validated against the product’s ultimate business and operational requirements. Failing to adopt this holistic approach results in systemic failures that erode margins and stall product lines.

Here is how a Systems Thinking approach transforms embedded development through three core pillars.

Pillar 1: Requirements Traceability — The Risk Firewall

Systems Thinking begins by establishing a comprehensive, bidirectional link between the highest-level product requirements and the lowest-level code commits.

- De-Risking the Spec: We convert ambiguous marketing requirements (e.g., “fast response time”) into verifiable technical specifications (e.g., “command-to-response latency ≤ 5 ms under 70% network load”).

- Accelerated Root-Cause Analysis (RCA): Traceability is the foundation for efficient troubleshooting. When a fault is reported in the field, we can trace the failure mode back through the Validation Test Plan (VTP) to the originating requirement. This shortens Mean Time To Resolution (MTTR) because the investigation starts from a known requirement and its tests rather than from the symptom alone.

- The ECO Control Gate: Every Engineering Change Order (ECO) is evaluated against all original requirements, acting as a firewall against regressions or performance trade-offs in unrelated subsystems.
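As a minimal sketch of how these links might be modeled, the following keeps a small in-memory traceability matrix. The class name `TraceMatrix`, the ID formats (`REQ-001`, `VTP-017`), and the commit hash are hypothetical; a real program would typically keep this data in a requirements management tool rather than in code.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    tests: set[str] = field(default_factory=set)    # VTP test case IDs
    commits: set[str] = field(default_factory=set)  # commit hashes


class TraceMatrix:
    """Bidirectional links between requirements, tests, and commits (illustrative)."""

    def __init__(self) -> None:
        self.requirements: dict[str, Requirement] = {}
        self._by_test: dict[str, set[str]] = {}
        self._by_commit: dict[str, set[str]] = {}

    def add_requirement(self, req_id: str, text: str) -> None:
        self.requirements[req_id] = Requirement(req_id, text)

    def link_test(self, req_id: str, test_id: str) -> None:
        self.requirements[req_id].tests.add(test_id)
        self._by_test.setdefault(test_id, set()).add(req_id)

    def link_commit(self, req_id: str, commit: str) -> None:
        self.requirements[req_id].commits.add(commit)
        self._by_commit.setdefault(commit, set()).add(req_id)

    def requirements_for_test(self, test_id: str) -> set[str]:
        """RCA direction: from a failing test back to its originating requirements."""
        return set(self._by_test.get(test_id, set()))

    def eco_impact(self, changed_commits: list[str]) -> dict[str, set[str]]:
        """ECO gate: requirements touched by a change, mapped to the tests to re-run."""
        impacted: dict[str, set[str]] = {}
        for commit in changed_commits:
            for req_id in self._by_commit.get(commit, set()):
                impacted[req_id] = set(self.requirements[req_id].tests)
        return impacted

    def untested(self) -> list[str]:
        """Requirements that no test verifies yet."""
        return sorted(r for r, req in self.requirements.items() if not req.tests)


matrix = TraceMatrix()
matrix.add_requirement(
    "REQ-001", "command-to-response latency <= 5 ms under 70% network load"
)
matrix.add_requirement("REQ-002", "hypothetical placeholder with no test yet")
matrix.link_test("REQ-001", "VTP-017")
matrix.link_commit("REQ-001", "a1b2c3d")

print(matrix.requirements_for_test("VTP-017"))
print(matrix.eco_impact(["a1b2c3d"]))
print(matrix.untested())
```

The reverse indexes (`_by_test`, `_by_commit`) are what make the link bidirectional: the same data answers both "which tests cover this requirement?" and "which requirement does this failing test or changed commit belong to?".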

Pillar 2: Architecture Partitioning — Scaling the Ecosystem

A component-centric view sees hardware, firmware, and cloud as separate domains. A Systems Thinking view partitions the workload across these domains to optimize performance, Total Cost of Ownership (TCO), and future scalability.

Strategic Workload Allocation

The architecture is defined by placing functions where they are most efficient:

- Hardware: Reserved for functions requiring real-time I/O control or power-constrained signal processing.

- Firmware/RTOS: Optimized for scheduling, OTA update management, and enforcing safety/security policies.

- Cloud/Edge: Utilized for non-time-critical processing, data aggregation, long-term analytics, and ML inference.
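The allocation rules above can be written down as a simple decision function. This is a deliberately simplified sketch: the `Workload` fields and the rule order are assumptions made for illustration, and real partitioning decisions also weigh cost, bandwidth, and certification constraints.

```python
from dataclasses import dataclass
from enum import Enum


class Domain(Enum):
    HARDWARE = "hardware"
    FIRMWARE = "firmware/RTOS"
    CLOUD = "cloud/edge"


@dataclass(frozen=True)
class Workload:
    name: str
    real_time_io: bool           # needs real-time I/O control
    power_constrained_dsp: bool  # power-constrained signal processing
    device_policy: bool          # scheduling, OTA updates, safety/security policy


def allocate(workload: Workload) -> Domain:
    """Place a workload in the domain suggested by the rules in the list above."""
    if workload.real_time_io or workload.power_constrained_dsp:
        return Domain.HARDWARE
    if workload.device_policy:
        return Domain.FIRMWARE
    # Everything else is treated as non-time-critical: aggregation, analytics, ML.
    return Domain.CLOUD


# Hypothetical workloads, used only to exercise the rules.
examples = [
    Workload("motor control loop", True, False, False),
    Workload("OTA update manager", False, False, True),
    Workload("fleet usage analytics", False, False, False),
]
for w in examples:
    print(f"{w.name}: {allocate(w).value}")
```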

Interface Rigor

Establishing precise, immutable APIs and protocols between these partitions prevents tight coupling. This modularity means that a major update to the cloud backend need not force a hardware re-spin, which protects the system’s longevity.
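One common way to make such an interface precise is a fixed binary frame with an explicit version field, so each side can reject frames it does not understand instead of misreading them. The sketch below assumes a hypothetical 8-byte frame layout; the field names, sizes, and `PROTOCOL_VERSION` value are illustrative and not taken from any specific product.

```python
import struct

PROTOCOL_VERSION = 1

# Little-endian, no padding:
# version (uint8), message type (uint8), sequence (uint16), value (int32)
_FRAME = struct.Struct("<BBHi")


class UnsupportedVersion(ValueError):
    """Raised when a frame carries a protocol version this side does not speak."""


def encode(msg_type: int, seq: int, value: int) -> bytes:
    """Serialize one message into the fixed 8-byte frame."""
    return _FRAME.pack(PROTOCOL_VERSION, msg_type, seq, value)


def decode(frame: bytes) -> tuple[int, int, int]:
    """Parse a frame, rejecting wrong sizes and unknown versions."""
    if len(frame) != _FRAME.size:
        raise ValueError(f"expected {_FRAME.size} bytes, got {len(frame)}")
    version, msg_type, seq, value = _FRAME.unpack(frame)
    if version != PROTOCOL_VERSION:
        raise UnsupportedVersion(f"frame version {version} is not supported")
    return msg_type, seq, value


frame = encode(msg_type=2, seq=513, value=-40)
print(frame.hex())
print(decode(frame))
```

Because the layout is fixed and the version travels with every frame, either side of the partition can evolve internally without the other needing to change, as long as the frame contract is honored.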

Pillar 3: Validation Rigor — Assurance Across the Lifecycle

The only way to achieve predictable product success is through systemic, automated verification and validation (V&V) that mirrors the operational environment.

- Hardware-in-the-Loop (HIL) Testing: We move beyond manual bench tests. HIL systems integrate the Device Under Test (DUT) with simulated environments (thermal chambers, emulated sensors, degraded networks), with a target of >95% test coverage.

- Field Data as the Final V&V: The production telemetry pipeline is the ultimate test tool. Continuous monitoring compares field-reported performance against the baseline, triggering RCA before issues escalate into warranty events.

- Lifecycle Management: Systems Thinking proactively addresses future risk, including Component End-of-Life (EOL) management and regulatory compliance, reducing the risk of manufacturing shutdowns.
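The field-data comparison described above can be sketched as a check of aggregated telemetry against per-metric baselines. The metric names, baseline values, and tolerances here are hypothetical, and a production pipeline would likely use windowed statistics rather than a plain mean.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Baseline:
    metric: str
    expected: float   # validated baseline value; must be non-zero
    tolerance: float  # allowed relative deviation, e.g. 0.10 for 10%


def drifted_metrics(
    baselines: list[Baseline], field_samples: dict[str, list[float]]
) -> list[str]:
    """Return the metrics whose field mean deviates beyond tolerance."""
    flagged = []
    for baseline in baselines:
        samples = field_samples.get(baseline.metric)
        if not samples:
            continue  # no field data yet for this metric
        deviation = abs(mean(samples) - baseline.expected) / abs(baseline.expected)
        if deviation > baseline.tolerance:
            flagged.append(baseline.metric)
    return flagged


# Hypothetical baselines and telemetry, for illustration only.
baselines = [
    Baseline("latency_ms", expected=4.0, tolerance=0.10),
    Baseline("battery_v", expected=3.7, tolerance=0.05),
]
telemetry = {
    "latency_ms": [4.1, 4.0, 3.9],
    "battery_v": [3.2, 3.1, 3.3],
}
for metric in drifted_metrics(baselines, telemetry):
    print(f"open RCA ticket: {metric} drifted from baseline")
```

A flagged metric is the trigger point for RCA: the team investigates while the deviation is still a trend in the data rather than a warranty claim.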

From Components to Resilient Systems

Investing in Systems Thinking is investing in predictable success. It shifts development from reactive troubleshooting to proactive design assurance.

Stop designing components and start delivering systems. Your next product line requires a systemic, end-to-end approach that reduces technical debt and guarantees scalability.